Counterfactual Recipe Generation:

Exploring Models’ Compositional Generalization Ability in a Realistic Scenario

About

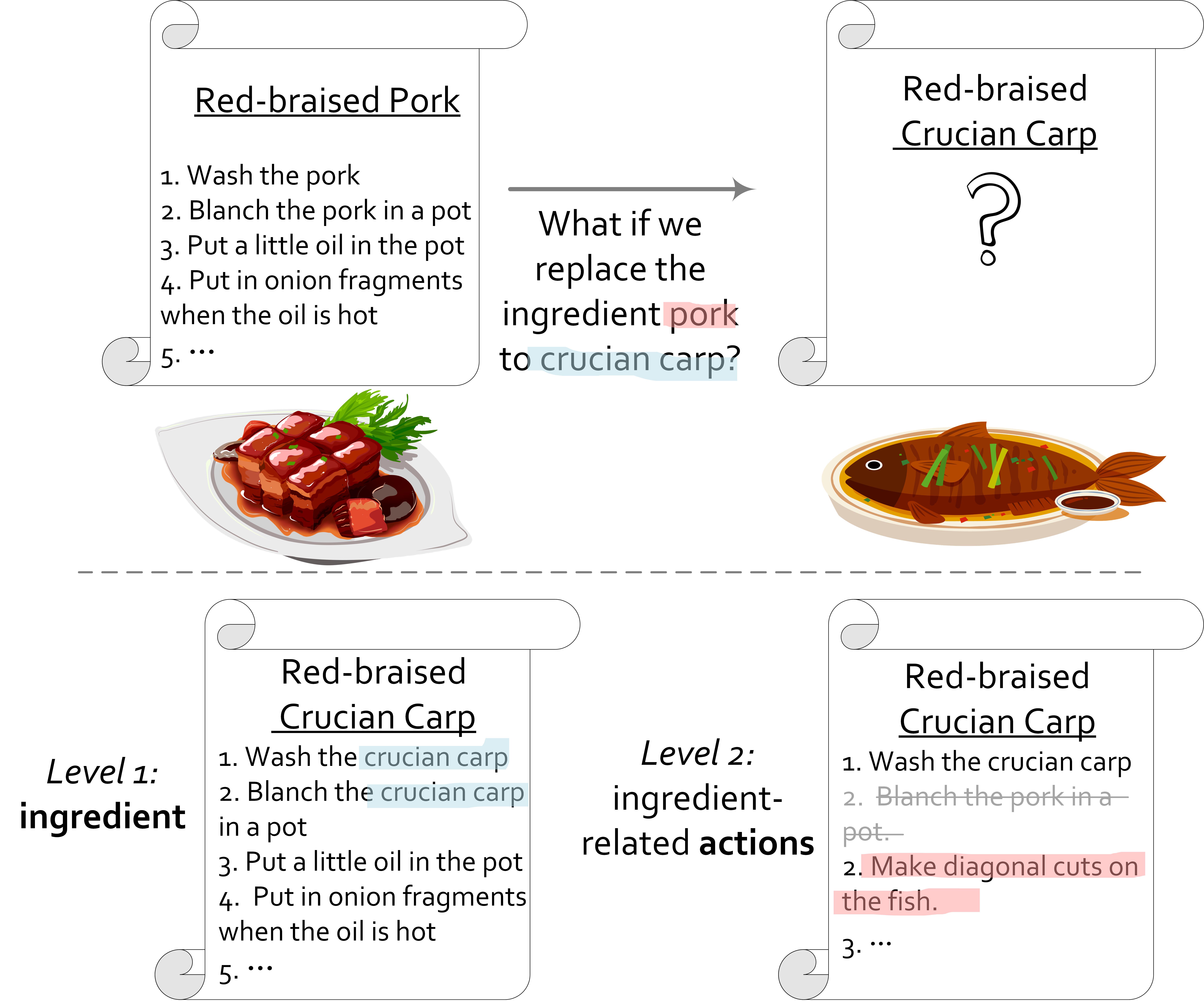

We investigate whether pretrained language models can perform compositional generalization in a realistic setting: recipe generation. We design the counterfactual recipe generation task, which asks models to modify a base recipe to reflect a change to one of its ingredients.

This task requires compositional generalization at two levels: the surface level, incorporating the new ingredient into the base recipe, and the deeper level, adjusting the actions related to the changed ingredient.
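To make the two levels concrete, here is a minimal Python sketch, assuming a recipe is represented as a list of step strings. The `CounterfactualExample` class, the `surface_substitute` function and the chicken-to-tofu recipe are hypothetical illustrations, not the dataset's format or the authors' method. A purely textual substitution handles the surface level but leaves ingredient-dependent actions untouched:

```python
import re
from dataclasses import dataclass


@dataclass
class CounterfactualExample:
    """Hypothetical container for one task instance (field names are illustrative)."""
    base_recipe: list[str]  # recipe steps, one string per step
    old_ingredient: str     # ingredient being replaced
    new_ingredient: str     # ingredient replacing it


def surface_substitute(example: CounterfactualExample) -> list[str]:
    """Naive surface-level edit: swap every mention of the old ingredient for the new one.

    This covers only the first level; it does not adjust actions whose
    validity depends on which ingredient is used.
    """
    pattern = re.compile(re.escape(example.old_ingredient), re.IGNORECASE)
    return [pattern.sub(example.new_ingredient, step) for step in example.base_recipe]


example = CounterfactualExample(
    base_recipe=[
        "Cut the chicken into cubes.",
        "Fry the chicken until it is no longer pink inside.",
        "Add the sauce and simmer for 5 minutes.",
    ],
    old_ingredient="chicken",
    new_ingredient="tofu",
)

for step in surface_substitute(example):
    print(step)
```

Every mention of chicken becomes tofu, yet the second step keeps the "no longer pink inside" doneness check, which only makes sense for chicken. Detecting and rewriting such actions is the deeper level of the task.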

Our results show that pretrained language models have difficulty modifying the ingredient while preserving the original text style, and often miss actions that need to be adjusted. Although pretrained language models can generate fluent recipe text, they fail to truly learn culinary knowledge and apply it compositionally.

Citation

If you find our work helpful, please cite us.

@article{liu2022counterfactual,
  title={Counterfactual Recipe Generation: Exploring Compositional Generalization in a Realistic Scenario},
  author={Liu, Xiao and Feng, Yansong and Tang, Jizhi and Hu, Chengang and Zhao, Dongyan},
  journal={arXiv preprint arXiv:2210.11431},
  year={2022}
}

Contact

For any questions, please contact Xiao Liu (lxlisa@pku.edu.cn) or open a GitHub issue. We thank Micheal Zhang for the open-source website layout code.

Acknowledgement

We would like to thank our great annotators for their careful work, especially Yujing Han, Yongning Dai, Meng Zhang, Yanhong Bai, Li Ju, Siying Li, and Jie Feng.